Vijay Ravindiran of FloreoTech.com created lessons and simulations that let autistic children practice navigating challenging real-life situations, like crossing the road or interacting with police. His talk gave great insight into how user testing informs product decisions, such as adding logic so a simulation can have more or fewer steps depending on the child's actions. The experience is very well thought out, from the logic in the interactions, to the fidelity each simulation requires, to the multi-user experience: the child is in the headset while the teacher manages the session on a tablet, each with an interface adapted to their role in the simulation. VR is not only for empathy. It can have a positive impact on people through practical (or magical) experiences.

*My Africa* is a wonderful 360 film funded by the Tiffany Foundation. I want to say it was the best linear storytelling I have experienced immersively. Since I was raised in Africa, I may be biased toward this film.

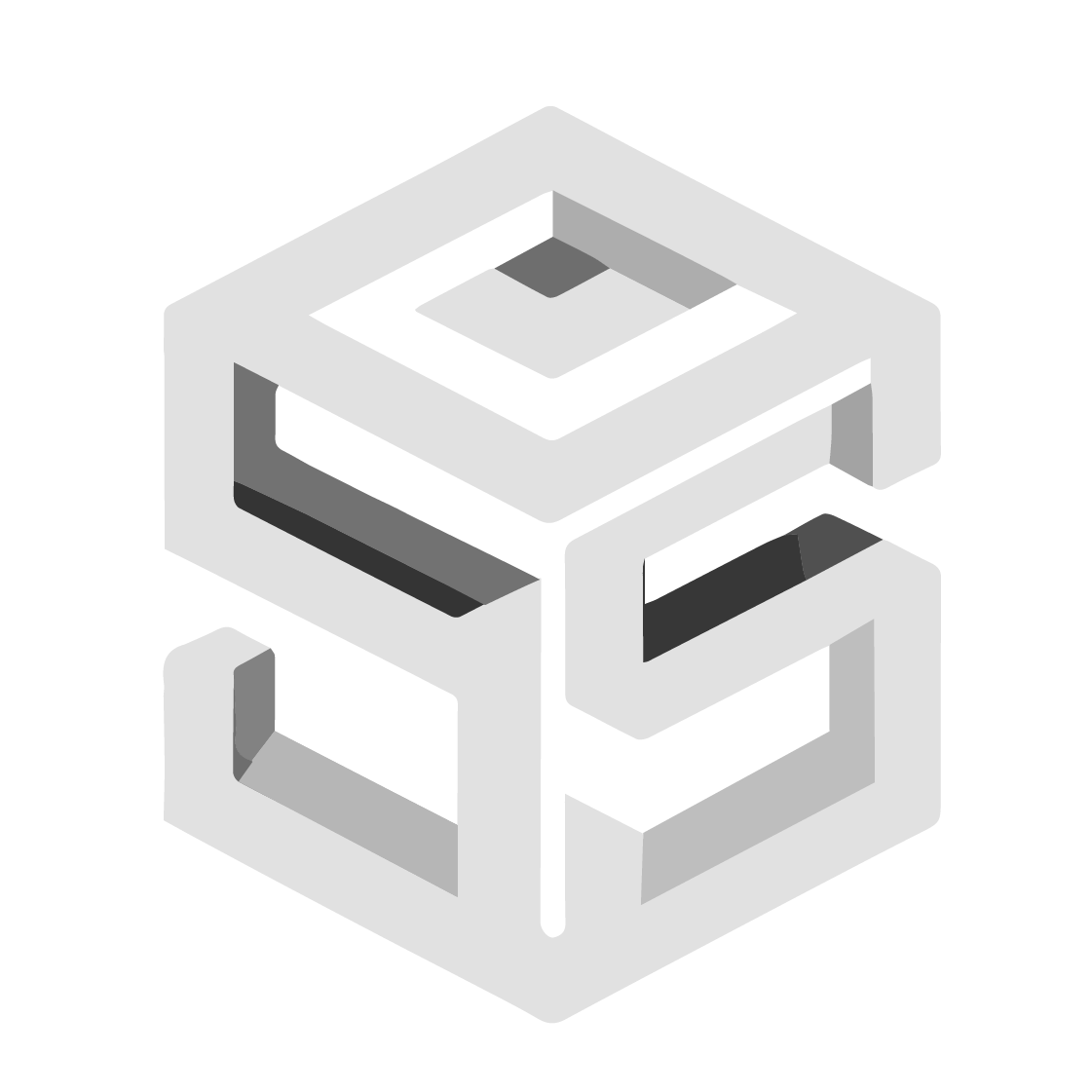

Brain Jam builds: functioning prototypes that explored the interplay between the brain and virtual or augmented experiences. The Brain Jam produced 8 different builds (or simulations) in 48 hours. One explored the potential cognitive impacts of switching the camera view from first person to third person to omniscient (planar view), demonstrated in a simulation of collaborating with industrial robots. Some explored neural input, like using EEG data from a headband to control simulated physics (mind-bending). Another used eye tracking to encourage autistic children to make eye contact. Beyond the silly “social media” lenses we currently use, this is the definition of a “social lens”: one that encourages and fosters real social interaction.

Gaia Dempsey, cofounder of DAQRI, spoke on a new hierarchy of needs. She proposed revising Maslow's hierarchy of needs (which was based on ethnographic studies of Blackfoot culture) with new input from First Nations tribal councils that use xR to envision the future and pass down cultural knowledge.

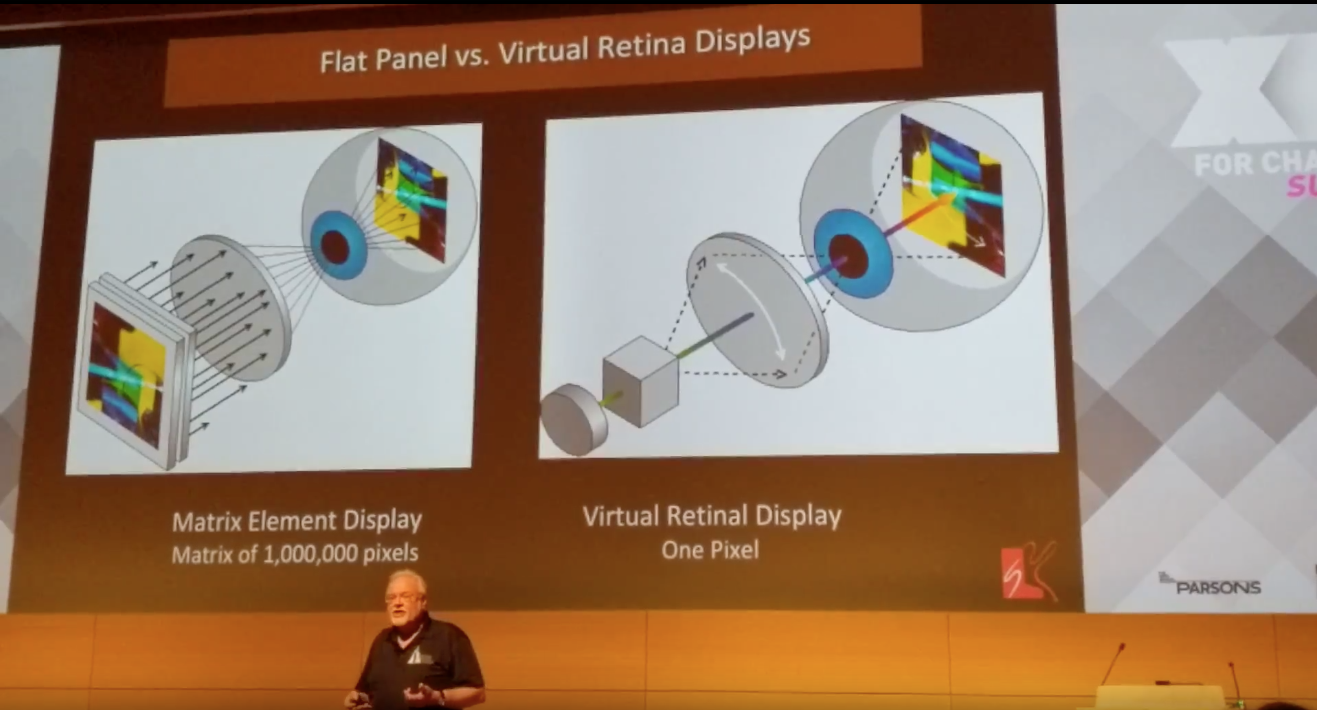

Tom Furness gave a more thorough history of VR's origins, along with guidelines for its future. He developed cockpit systems and head-up displays (HUDs) on head-mounted displays (HMDs). This work gave pilots “the ability to do things that had never been done before.” The display did not change the physics of the aircraft. Instead, it changed the pilot's perception of the aircraft and brought pilot and machine closer together. Spatial awareness (enemy location, your own location and orientation) and state awareness (weapons systems, fuel, speed, etc.) merged into a single situational awareness integrated directly into the pilot's visual perception.

Private research from decades ago is still more advanced than what is on the market now, which makes me wonder: “What is being worked on now?”

Tom Furness of the Virtual World Society demonstrated visualization methods in which pointing at a spot in the God's Eye View highlights the same point in the first-person HUD.